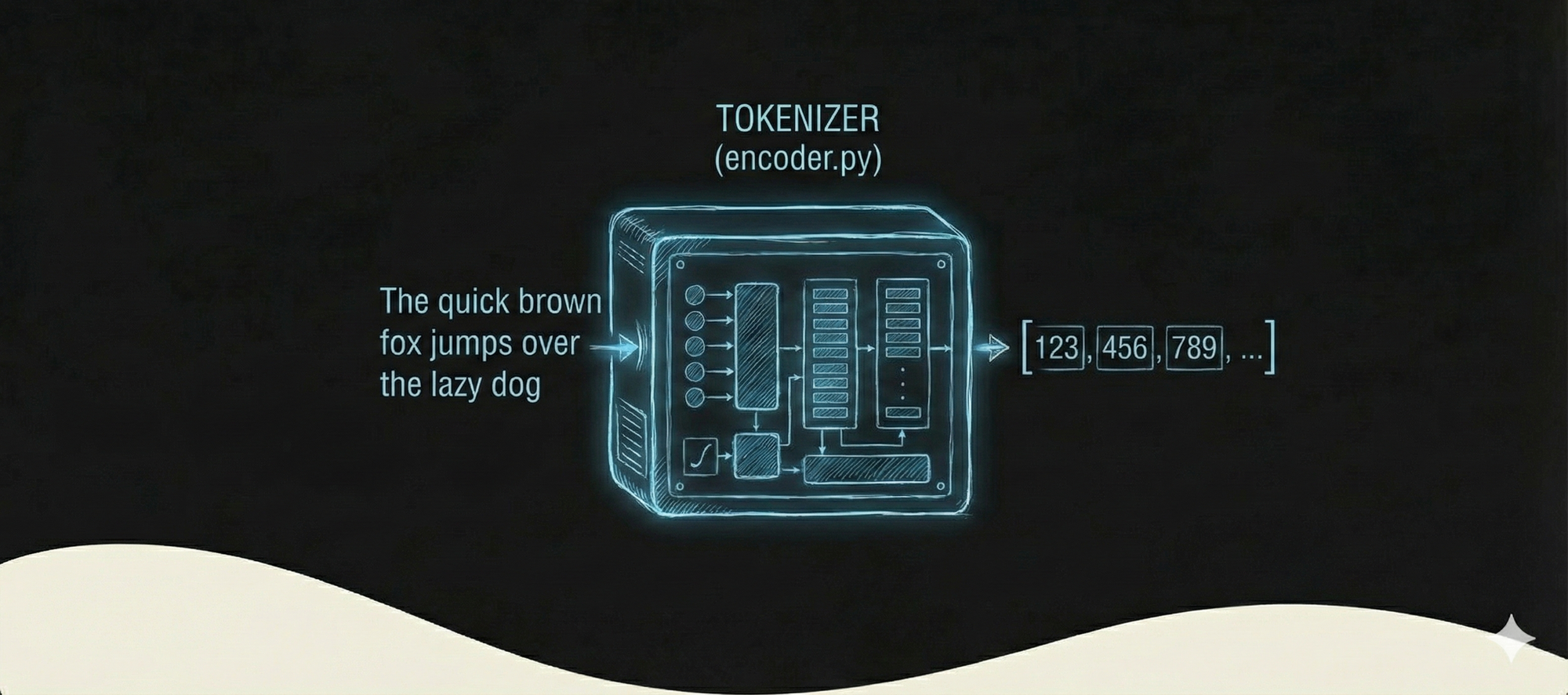

GPT-2 Source Code Notes, Part 1: The Tokenizer (encoder.py)

GPT-2’s tokenizer is surprisingly readable. This article walks through how the reversible bytes-to-unicode mapping and the BPE merge list actually work in practice.


I read through encoder.py, and it is compact and easy to follow. It rests on three ideas: a reversible bytes-to-unicode bridge, a regex that pre-splits text into word-like chunks before any merging, and a ranked list of BPE merges.
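To get a feel for the pre-split step, here is a minimal sketch. encoder.py compiles its pattern with the third-party regex module, which supports Unicode property classes such as \p{L} (letters) and \p{N} (numbers). The standard-library re module does not, so this sketch approximates those classes and may split some text slightly differently. The names PRESPLIT and presplit are hypothetical.

```python
import re

# Approximation of GPT-2's pre-split pattern using the stdlib `re` module.
# [^\W\d_]        ~ letters (word chars minus digits and underscore)
# \d              ~ digits
# (?:[^\s\w]|_)   ~ anything that is not a letter, digit, or whitespace
PRESPLIT = re.compile(
    r"""'s|'t|'re|'ve|'m|'ll|'d| ?[^\W\d_]+| ?\d+| ?(?:[^\s\w]|_)+|\s+(?!\S)|\s+"""
)


def presplit(text):
    """Split text into chunks; BPE is later applied to each chunk separately."""
    return PRESPLIT.findall(text)


print(presplit("Hello world's 2024 tokens!!"))
# ['Hello', ' world', "'s", ' 2024', ' tokens', '!!']
print(presplit("  hi"))
# [' ', ' hi']
```

Contractions like 's become their own chunk, and a run of spaces leaves its last space attached to the following word. Because BPE runs inside each chunk, merges never cross these boundaries.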

Bytes to Unicode mapping

```python
from functools import lru_cache

@lru_cache()
def bytes_to_unicode():
    """
    Returns list of utf-8 byte and a corresponding list of unicode strings.
    The reversible bpe codes work on unicode strings.
    This means you need a large number of unicode characters in your vocab if you want to avoid UNKs.
    When you are at something like a 10B token dataset you end up needing around 5K for decent coverage.
    To avoid that, we want lookup tables between utf-8 bytes and unicode strings.
    """
    # (Body omitted in this excerpt. It builds and returns a dict that maps
    # every byte value 0-255 to a printable unicode character.)
```

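This function maps each of the 256 possible byte values (0-255) to a printable Unicode character. Bytes that are already printable keep their own codepoint, while whitespace, control characters, and a few other bytes are shifted to unused codepoints starting at 256. Because the mapping is one-to-one, it can be inverted exactly. Because every byte has a symbol, any byte sequence can be represented, so no unknown (UNK) token is needed. BPE then only has to start from these 256 base symbols instead of thousands of Unicode characters.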
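The construction can be sketched in a few lines. This is a minimal re-implementation written for illustration, so the names bytes_to_unicode_sketch, to_symbols, and from_symbols are hypothetical. It follows the idea described above: keep printable bytes as-is and shift the rest past 255.

```python
def bytes_to_unicode_sketch():
    """Map each byte value 0-255 to a printable, non-whitespace character."""
    # Printable Latin-1 ranges keep their own codepoint:
    # 0x21-0x7E ('!'..'~'), 0xA1-0xAC ('¡'..'¬'), 0xAE-0xFF ('®'..'ÿ').
    printable = (
        list(range(0x21, 0x7E + 1))
        + list(range(0xA1, 0xAC + 1))
        + list(range(0xAE, 0xFF + 1))
    )
    byte_values = printable[:]
    codepoints = printable[:]
    n = 0
    for b in range(256):
        if b not in printable:
            # Whitespace, control bytes, NBSP and soft hyphen are shifted
            # into unused codepoints 256, 257, ... so they become visible.
            byte_values.append(b)
            codepoints.append(256 + n)
            n += 1
    return dict(zip(byte_values, map(chr, codepoints)))


byte_encoder = bytes_to_unicode_sketch()
byte_decoder = {sym: b for b, sym in byte_encoder.items()}  # exact inverse


def to_symbols(text):
    """Text -> UTF-8 bytes -> one printable symbol per byte."""
    return "".join(byte_encoder[b] for b in text.encode("utf-8"))


def from_symbols(symbols):
    """Symbols -> original bytes -> UTF-8 text."""
    return bytes(byte_decoder[c] for c in symbols).decode("utf-8")


s = to_symbols("héllo world\n")
print(s)  # hÃ©lloĠworldĊ
print(from_symbols(s) == "héllo world\n")  # True
```

The output explains a familiar sight in GPT-2 vocab files: a space (byte 32) becomes "Ġ", a newline (byte 10) becomes "Ċ", and a two-byte character such as "é" becomes two symbols.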

Fully reversible decode

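```python
# Method of the Encoder class in encoder.py.
# self.decoder:      id -> token string (inverse of encoder.json)
# self.byte_decoder: unicode symbol -> byte (inverse of bytes_to_unicode())
def decode(self, tokens):
    # Concatenate the symbol strings for every token id.
    text = ''.join([self.decoder[token] for token in tokens])
    # Turn each symbol back into its byte, then decode the bytes as UTF-8.
    text = bytearray([self.byte_decoder[c] for c in text]).decode('utf-8', errors=self.errors)
    return text
```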

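Decoding runs the pipeline backwards: each token id maps to its string of byte-symbols, each symbol maps back to exactly one byte, and the resulting byte string is decoded as UTF-8. For ids produced by encoding a piece of text, this returns the original text exactly, so nothing is lost between tokenization and decoding. The errors setting only comes into play when an arbitrary id sequence does not form valid UTF-8, for example when it cuts a multi-byte character in half.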
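The effect of the errors setting is easiest to see on raw bytes. This minimal sketch skips the id and symbol lookups and assumes three hypothetical tokens whose bytes together spell "café". The decode_bytes helper is hypothetical, and the policies passed to it are just the standard codec error handlers.

```python
# Hypothetical byte strings for three consecutive tokens.
# "é" is encoded in UTF-8 as the two bytes 0xC3 0xA9.
token_bytes = [b"caf", b"\xc3", b"\xa9"]


def decode_bytes(pieces, errors="replace"):
    """Join token bytes and decode as UTF-8 with the given error policy."""
    return b"".join(pieces).decode("utf-8", errors=errors)


full = decode_bytes(token_bytes)     # all tokens: valid UTF-8
cut = decode_bytes(token_bytes[:2])  # stops after the first byte of "é"
print(full)                          # café
print(cut == "caf\ufffd")            # True: U+FFFD replacement character

try:
    decode_bytes(token_bytes[:2], errors="strict")
except UnicodeDecodeError as exc:
    print("strict mode raises:", exc.reason)
```

With "replace" the truncated character degrades to U+FFFD instead of crashing, which is the friendlier choice when streaming tokens one at a time.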

Loading merges and the codebook

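```python
import json
import os

def get_encoder(model_name, models_dir):
    # encoder.json: token string -> integer id
    with open(os.path.join(models_dir, model_name, 'encoder.json'), 'r') as f:
        encoder = json.load(f)
    # vocab.bpe: one merge pair per line (e.g. "Ġ t"); line order = merge rank
    with open(os.path.join(models_dir, model_name, 'vocab.bpe'), 'r', encoding="utf-8") as f:
        bpe_data = f.read()
    # [1:-1] drops the "#version" header line and the empty string after the final newline
    bpe_merges = [tuple(merge_str.split()) for merge_str in bpe_data.split('\n')[1:-1]]
    # Encoder is the class defined earlier in encoder.py
    return Encoder(
        encoder=encoder,
        bpe_merges=bpe_merges,
    )
```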

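encoder.json maps each token string (written in the byte-to-unicode alphabet) to its integer id. vocab.bpe is the ranked merge list: one pair per line, in the order the merges were learned, so a merge's position in the file is its priority.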
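Here is a minimal sketch of how that file format turns into a rank table. The sample text is a tiny hypothetical stand-in for vocab.bpe, using a "#version" header line like GPT-2's file, and parse_merges is a hypothetical helper that reuses the slicing from get_encoder. The Encoder class builds a similar pair-to-rank dict from bpe_merges.

```python
# Tiny hypothetical stand-in for vocab.bpe: header line, then one merge per line.
SAMPLE_VOCAB_BPE = "#version: 0.2\nl o\nlo w\ne r\nlow er\n"


def parse_merges(bpe_data):
    # Same slicing as get_encoder: skip the header line and the empty
    # string produced by splitting after the trailing newline.
    return [tuple(line.split()) for line in bpe_data.split("\n")[1:-1]]


merges = parse_merges(SAMPLE_VOCAB_BPE)
# Lower rank = learned earlier = higher merge priority.
bpe_ranks = {pair: rank for rank, pair in enumerate(merges)}

print(merges)                 # [('l', 'o'), ('lo', 'w'), ('e', 'r'), ('low', 'er')]
print(bpe_ranks[("e", "r")])  # 2
```

The [1:-1] slice assumes the file ends with a newline. Without one, the last merge would be silently dropped.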

How BPE merging actually behaves

Example for intuition only (not the real GPT-2 merges):

Merges learned in this order:

- l o becomes lo

- lo w becomes low

- e r becomes er

- low er becomes lower

Encoding "lower" then proceeds like this:

l o w e r

lo w e r

low e r

low er

lower

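At every step the tokenizer looks at all adjacent pairs in the current word, picks the one that appears earliest in the merge list, merges every occurrence of it, and stops once no adjacent pair appears in the list at all. Common words end up as a single piece, while rare ones remain split into several. BPE is nothing more than this repeated merging, driven by a learned priority ranking.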
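The merge rule can be sketched as a short loop. This is a minimal sketch, not encoder.py's bpe method verbatim. It leaves out that method's cache and uses the hypothetical merge table from the example above rather than GPT-2's real ranks. TOY_MERGES and RANKS are illustrative names.

```python
# Hypothetical merges from the example, in learned order (rank = index).
TOY_MERGES = [("l", "o"), ("lo", "w"), ("e", "r"), ("low", "er")]
RANKS = {pair: rank for rank, pair in enumerate(TOY_MERGES)}


def bpe(token, ranks):
    """Repeatedly merge the adjacent pair with the lowest rank."""
    word = list(token)
    steps = [" ".join(word)]
    while len(word) > 1:
        pairs = {(word[i], word[i + 1]) for i in range(len(word) - 1)}
        best = min(pairs, key=lambda p: ranks.get(p, float("inf")))
        if best not in ranks:
            break  # no adjacent pair is in the merge list: done
        first, second = best
        merged, i = [], 0
        while i < len(word):
            # Merge every non-overlapping occurrence, scanning left to right.
            if i < len(word) - 1 and word[i] == first and word[i + 1] == second:
                merged.append(first + second)
                i += 2
            else:
                merged.append(word[i])
                i += 1
        word = merged
        steps.append(" ".join(word))
    return word, steps


pieces, steps = bpe("lower", RANKS)
for line in steps:
    print(line)  # l o w e r / lo w e r / low e r / low er / lower
print(bpe("lowest", RANKS)[0])  # ['low', 'e', 's', 't']
```

"lowest" shows the rare-word case: once "low" forms, none of the remaining pairs are in the table, so the rest stays as single characters.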

Why this file is important

This tokenizer is small enough and readable enough that you can see how subword tokenization actually works. If someone wants to understand why models behave differently depending on vocabulary, this file is a good entry point. Understanding tokenization also makes it easier to reason about context length, cost, and sampling behavior later.